Outlier Management for Robust Visual SLAM in Dynamic Environments with Easy Map and Camera Pose Initialization

Abstract

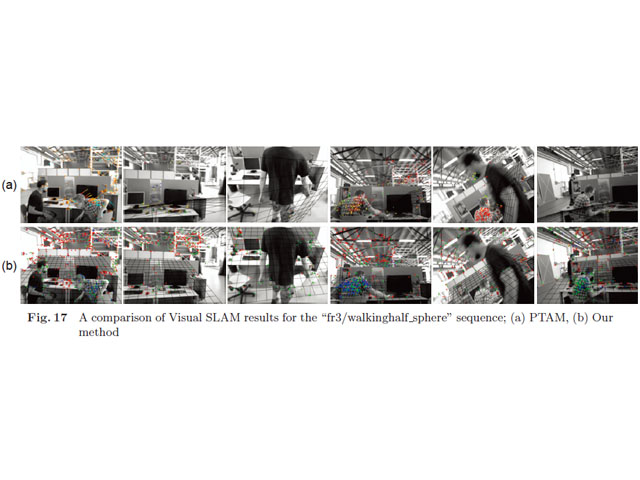

We present a robust monocular visual simultaneous localization and mapping (Visual SLAM) method that estimates camera poses reliably in dynamic environments and reduces the user operation load for Visual SLAM initialization. In dynamic environments, standard monocular Visual SLAM, which relies on 3D/2D matches between a recovered 3D map and feature points in the current frame, tends to fail because objects that keep moving over time distort the 3D map. To address this issue, we classify the outliers rejected by a robust estimator during camera pose estimation into two groups: feature points on moving objects, and mismatched points caused by occlusions, specular reflections, textureless regions, and similar effects. To achieve this, we first construct an angle histogram from outlier flows, which are the vectors between reprojected points and matched points in the current frame, and then approximate the histogram with a mixture of Gaussian functions. Finally, we estimate the parameters of the Gaussian mixture with an expectation-maximization (EM) algorithm. We also introduce weighted tentative initial values for the 3D map and the camera pose to reduce the user operation load. Experimental results demonstrate that our system works robustly in highly dynamic environments and initializes itself without user assistance.
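The histogram-and-mixture step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`flow_angles`, `angle_histogram`, `fit_gmm_em`), the bin count, the initial means, and the variance floor are all assumptions made for illustration, and the sketch treats angles as ordinary real values in [-pi, pi], ignoring wrap-around at the interval ends. The rule that turns fitted components into the two outlier classes is not given in the abstract and is not attempted here.

```python
import math

def flow_angles(reprojected, matched):
    """Angle in radians of each outlier flow (reprojected point -> matched point)."""
    return [math.atan2(my - ry, mx - rx)
            for (rx, ry), (mx, my) in zip(reprojected, matched)]

def angle_histogram(angles, n_bins=36):
    """Histogram over [-pi, pi]; returns (bin centers, counts). n_bins is an assumption."""
    width = 2 * math.pi / n_bins
    counts = [0] * n_bins
    for a in angles:
        idx = min(int((a + math.pi) / width), n_bins - 1)  # clamp a == pi into last bin
        counts[idx] += 1
    centers = [-math.pi + (i + 0.5) * width for i in range(n_bins)]
    return centers, counts

def gaussian(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def fit_gmm_em(centers, counts, init_means, n_iter=100, min_var=1e-4):
    """Fit a 1D Gaussian mixture to a histogram by EM, weighting each bin by its count.

    Simplification (assumption): angles are not treated as circular quantities.
    """
    k = len(init_means)
    total = sum(counts)
    if total == 0:
        raise ValueError("histogram is empty")
    weights = [1.0 / k] * k
    means = list(init_means)
    variances = [1.0] * k
    for _ in range(n_iter):
        # E-step: responsibility of each component for each bin center.
        resp = []
        for x in centers:
            p = [weights[j] * gaussian(x, means[j], variances[j]) for j in range(k)]
            s = sum(p)
            resp.append([pj / s for pj in p] if s > 0 else [1.0 / k] * k)
        # M-step: count-weighted updates of weight, mean, and variance.
        for j in range(k):
            nj = sum(c * r[j] for c, r in zip(counts, resp))
            if nj < 1e-12:
                continue  # component received no mass; keep its previous parameters
            means[j] = sum(c * r[j] * x for c, r, x in zip(counts, resp, centers)) / nj
            var = sum(c * r[j] * (x - means[j]) ** 2
                      for c, r, x in zip(counts, resp, centers)) / nj
            variances[j] = max(var, min_var)  # floor avoids a degenerate component
            weights[j] = nj / total
    return weights, means, variances

# Synthetic example: two groups of flows with coherent directions.
true_angles = ([0.5 + 0.02 * (i - 10) for i in range(21)]
               + [-2.0 + 0.02 * (i - 5) for i in range(11)])
reprojected = [(0.0, 0.0)] * len(true_angles)
matched = [(math.cos(a), math.sin(a)) for a in true_angles]

angles = flow_angles(reprojected, matched)
centers, counts = angle_histogram(angles)
weights, means, variances = fit_gmm_em(centers, counts, init_means=[0.0, -1.5])
```

In this toy setup, a narrow, heavily weighted component corresponds to many flows sharing one direction, as would be expected from points on a single moving object, whereas mismatches would tend to spread across directions. How such components are mapped to the two classes in practice is left open here.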

Authors

Jun SHIMAMURA, Kyoko SUDO, Masashi MORIMOTO, Tatsuya OSAWA, Yukinobu TANIGUCHI

NTT Media Intelligence Laboratories, Nippon Telegraph and Telephone Corporation